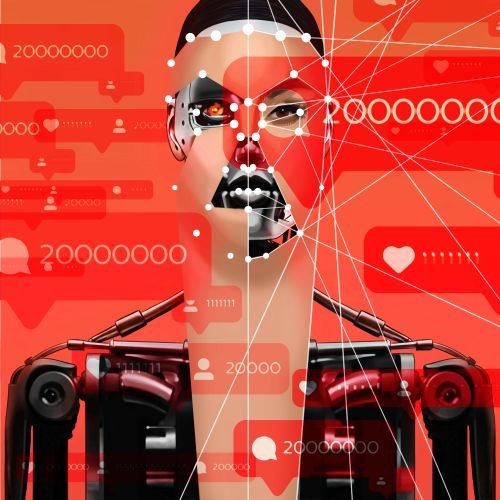

AI and prejudice, manipulation and political assumptions

Researchers from institutions including Carnegie Mellon University asked AI language models for their views on topics such as feminism and democracy, in order to uncover the models' underlying biases and political assumptions. They found that models trained on left-wing data were more sensitive to hate speech directed at ethnic, religious and sexual minorities in the US, whereas those trained on right-wing data were more sensitive to hate speech against white Christians. Left-wing language models were also better at identifying disinformation from right-wing sources but less sensitive to disinformation from left-wing sources. Right-wing language models showed the opposite behaviour.

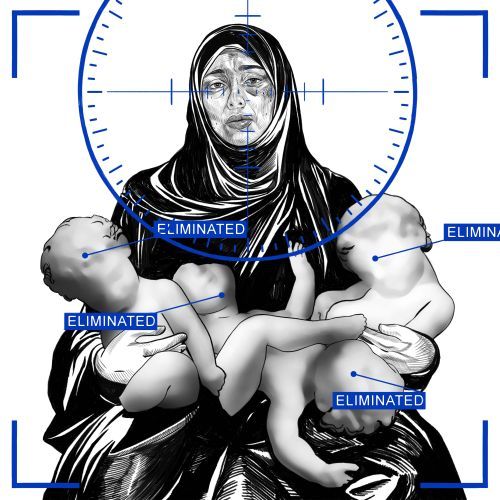

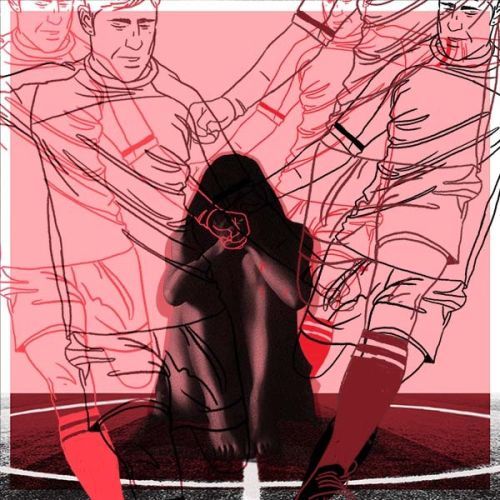

According to Odanga Madung, a journalist and data analyst in Kenya, the country's disinformation industry easily manipulated Twitter's algorithm to spread propaganda and suppress public dissent. A similar situation unfolded in Nigeria before the 2023 elections. TikTok's algorithm, meanwhile, served Kenyans hundreds of hateful and inflammatory propaganda videos ahead of the 2022 elections. Both TikTok and Twitter have come under scrutiny for their role in amplifying hate directed at LGBTQ+ minorities in Kenya and Uganda. Social media platforms, including Facebook, have accelerated the spread of propaganda through microtargeting and by allowing campaigns to sidestep election silence periods. Autocratic governments, which exploit emotions to polarise societies, are the most eager to take advantage of this.