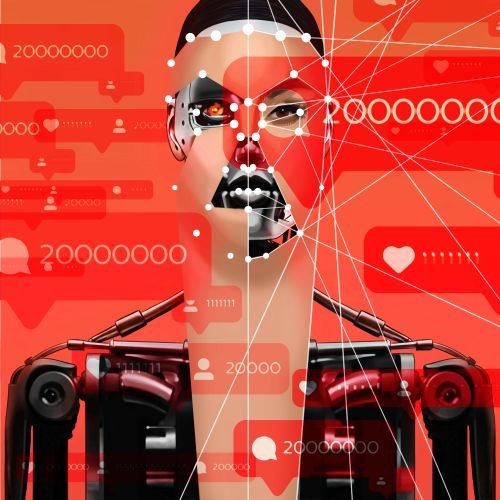

How artificial intelligence fights racism in football

Companies such as Go Bubble are introducing AI tools that block offensive comments aimed at football players on social media. According to FIFA, racism is the most common form of abuse, experienced by more than half of players. FIFA and FIFPRO are using moderation to block racist posts; football organisations are calling for social media companies to be regulated and penalised; and activists are stressing the need for stricter vetting of users.

According to statistics from the past three seasons, racism is the dominant form of social media abuse in English football. It is a new, technology-driven form of racism that operates around the clock while echoing older forms of discrimination. Some teams and athletes are turning to alternative social media platforms to promote both themselves and more ethical behaviour online. These include Striver and PixStory, which have nearly one million users, rank contributors by the integrity of their posts, and aim to create “fair communities.”

Working anonymously on behalf of UEFA and anti-discrimination organisations, volunteer observers monitor racist and extremist behaviour in the stands at football matches across Europe. They record the banners, chants and symbols of supporter groups and provide evidence that can be used in disciplinary proceedings against clubs. Observers must stay discreet and alert to avoid being detected by extremist groups; their work aims to make football safer and more inclusive.